How to Predict the Future in Foreign Policy

By: Dr. Thomas Scherer | November 25, 2025

Introduction

Success in foreign policy depends on the ability to foresee and prepare for events, such as the U.S. government’s anticipation of Russia’s invasion of Ukraine. Inversely, foreign policy failure is often rooted in being caught off guard by events, such as the Biden administration’s failure to anticipate how quickly the Afghan government would collapse.

Understanding the future is a central task of foreign policy. Policymakers and analysts are regularly confronted with questions such as: What is the adversary’s next move? Will our ally support this action? Which new threats, trends, or technologies must we prepare for? And, perhaps most importantly, how do we expect our policies to shape the future? Every policy and strategy is a claim about how the future will transpire.

A large industry has developed around the field of foreign policy to advance forecasting. The names and methods of this work are varied: forecasting markets, scenario planning, tabletop exercises, risk management, early warning systems, red teams, futurism, geopolitical intelligence, and more. Many of these structures are being experimented with inside and outside of government, some with seemingly great success.

Less well known is how well these tools actually work. Fortunately, scholarly research into future studies has grown exponentially in the last twenty years, though the vast majority of forecasting research is on non-foreign policy topics such as weather and the economic demand for goods and services.1 This report is designed to give a foreign policy audience an overview of common approaches to understanding the future. It describes each approach, reviews the recent research, and identifies critical knowledge gaps for future research. For this report, I reviewed over 100 books and articles with an emphasis on meta-reviews. I used broad reviews of future studies to identify the main methodological categories and then conducted focused searches of recent publications (within the last 20 years) on each category.

Readers will find in this review both good news and bad news. The good news is that there is strong evidence that certain tools are effective at improving one’s ability to understand the future. Even highly trained intelligence analysts have been outdone by new methods of forecasting.2 The bad news is that much of the literature remains immature, and even the most optimistic studies counsel humility in the face of the vast uncertainty inherent in international affairs. Nevertheless, in the competitive world of national security, where a single decision can have massive consequences, improving the predictive accuracy of policymakers by small margins could significantly shift geopolitical fortunes.

This report groups research into three categories according to the underlying techniques used to understand the future: Human Judgment Forecasting, Quantitative Prediction, and Foresight (which here includes Horizon Scanning). These categories are intended as broad summaries, as many experts and organizations describe their efforts with divergences in terminology and methodology.3

I define my categories as follows:

Human Judgment Forecasting involves developing highly accurate human predictors by identifying talent, training skills, and refining the prediction process.

Quantitative Prediction involves using datasets and statistical methods to model relationships between variables and extrapolate them to make predictions.

Foresight involves scanning for signals and trends of possible futures and then working through scenarios based on those futures to improve planning in the present.

Human Judgment Forecasting

How Does it Work?

Human Judgment Forecasting methods are focused on improving forecasts by identifying the personalities, skills, and techniques that lead to greater accuracy. Research has found that some people are naturally better forecasters, and some are superforecasters, consistently out-predicting other experts. Research has also demonstrated that forecasting is a skill that can be trained and improved. It turns out that reading the crystal ball is more science than art.

Much of our understanding comes from forecasting tournaments, where hundreds of individuals compete to answer questions about the future with the highest accuracy.4 A typical tournament might ask forecasters thousands of questions, and then score the accuracy of their predictions in order to rank participants. Researchers can then identify the characteristics associated with the highest-scoring individuals or teams.

Crucial to this effort is the ability to evaluate whether predictions are right. This requires resolvable questions, meaning that they have clear, observable criteria and a deadline. For example, “Will more than 100,000 people be killed in the Russo-Ukrainian War in 2024?” is a resolvable question, whereas “What’s going to happen in the Russo-Ukrainian war?” is not. Typical questions ask when something will happen, how much or how many of something will occur, or whether something will happen at all (a simple yes-or-no answer). Given that the questions are necessarily about the future, a tournament’s results can only be tabulated once reality has provided the answer for each question. For instance, if a question invites one to predict the result of an election in November 2028, it can only be resolved once that date has passed.

Forecasters not only offer their prediction, but also their level of uncertainty. Predicting something will occur with 90% confidence, and ultimately being proven right, will garner a better score than correctly predicting something will occur with 80% confidence. Similarly, registering a prediction with 100% confidence – but being proven wrong – will be very costly to a forecaster’s score. The exact details of how predictions are made and scored vary, but typically, predictions closer to the actual outcome score higher.
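To make the scoring logic concrete, here is a minimal sketch using the Brier score, one widely used scoring rule. The report does not say which rule any particular tournament uses, so the choice of rule and the function name are my assumptions for illustration.

```python
def brier_score(forecast_prob: float, outcome: int) -> float:
    """Squared gap between the stated probability and what happened (1 or 0).

    Lower is better: 0.0 is a perfect forecast, 1.0 is the worst possible.
    The Brier score is an assumed scoring rule, chosen only for illustration.
    """
    if not 0.0 <= forecast_prob <= 1.0:
        raise ValueError("forecast_prob must be between 0 and 1")
    if outcome not in (0, 1):
        raise ValueError("outcome must be 0 (did not happen) or 1 (happened)")
    return (forecast_prob - outcome) ** 2


# The event happened (outcome = 1): the more confident forecast scores better.
confident_and_right = brier_score(0.90, 1)
cautious_and_right = brier_score(0.80, 1)

# The event did not happen (outcome = 0): total certainty earns the worst score.
certain_and_wrong = brier_score(1.00, 0)

print(confident_and_right, cautious_and_right, certain_and_wrong)
```

Because the penalty grows with the square of the miss, a forecaster gains a little from well-placed confidence and loses a great deal from misplaced certainty.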

Confidence is a useful characteristic in forecasting tournaments because it mirrors the methodology prescribed by the intelligence community. Intelligence Community Directive 203 requires that all analysis “Properly expresses and explains uncertainties associated with major analytic judgments,” using specific expressions of uncertainty (Figure 1).5

Figure 1: Required uncertainty terminology in the Intelligence Community.6

What Does the Research Say?

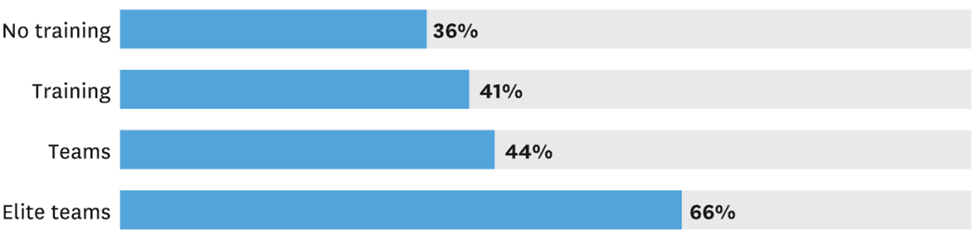

Through a series of forecasting competitions and experiments, researchers have generated a growing body of evidence validating the strength of this approach and providing useful insight about how to improve human judgment forecasting. One study tracking forecasts of geopolitical events suggested that while untrained forecasters were 36% more accurate than random guessing, forecasters with just one hour of training were 41% more accurate (Figure 2).7

Figure 2: Percent increase in forecaster accuracy over random guesses with training and teams.8

Training

Better forecasting can be taught. Training in probabilistic reasoning, especially the use of comparison classes and base rates, improves accuracy.9 The impact of this training can be seen in how forecasters use comparison classes and dialectical complexity in their reasoning, as demonstrated by their use of terms like ‘however,’ ‘yet,’ ‘unless,’ and ‘on the other hand.’10 One experiment found that even a half-hour lesson on forecasting improved scores.11

Forecasters who were asked to consider analogies in a structured way were more accurate than those who were not (46% accuracy vs. 32%). Accuracy improved further when forecasters thought of multiple analogies or analogies that they had direct experience with (60%).12 If left to their own devices, participants avoided the analogy technique because it was more onerous and cognitively challenging.

Accuracy also improves with practice. One study found that forecasters who scored lower on cognitive tests and had less accurate forecasts showed greater improvement over two years, narrowing the gap between them and the top forecasters.13 Feedback that allows forecasters to learn has been repeatedly shown to improve forecasts.14

Education and Experience

More education and work experience improve forecasting, but only to a point. People who demonstrate greater knowledge of current events have higher forecasting accuracy.15 Working professionals make more accurate predictions than undergraduate students, but having a PhD offers no additional improvement.

Surprisingly, more years of experience do not further improve predictions among working professionals. Most surprisingly, professionals with specific expertise in a region did not perform better on predictions about that region. People with access to classified information also performed no better than outsiders.16

Cognitive Style

Research suggests that open-minded forecasters tend to be more accurate. In Expert Political Judgment, Philip Tetlock found that the best forecasters enjoy questions that can be answered in many ways and give serious consideration to each possible answer. They eschew grand theories that promise to explain everything, preferring flexible ‘ad hocery’ that stitches together lessons from a wide range of world views. They succeed because they are more open to dissonant counterfactuals, more willing to update their beliefs, and less susceptible to hindsight bias.17

Further, forecasters who scored higher on a test for open-mindedness (i.e., those who find value in considering opposing arguments and changing beliefs) made better predictions.18 More accurate forecasters spend more time on a question, suggestive of greater deliberation, and make more prediction attempts on one question, likely updating their beliefs with new information.19 Better forecasters also scored higher on cognitive tests that assess fluid intelligence and the ability to resist seemingly obvious but incorrect answers.20 The findings related to open-mindedness have been challenged, however. Another study found that whether a forecaster self-reported open-mindedness was not related to accuracy.21

Belief System

Forecasters with moderate beliefs tend to perform better than their ideological counterparts. Across dimensions of right-left (faith in free markets), realist-institutionalist (centrality of power in foreign affairs), and boomster-doomster (optimism about global economic growth), those in the middle of each of these three dimensions were more accurate than those on the extremes in either direction.22

Teamwork

Groups generally perform better than individuals in forecasting.23 Even high-performing forecasters show greater accuracy when placed in groups. The best-performing groups are more decentralized, with egalitarian participation; the benefits of teamwork dissipate within a top-down decision-making structure. High-performing groups also gave longer reasons for a forecast with more explanations, and their discussions were more likely to include words associated with good teamwork and analysis.

Groups that discuss forecasts and interact more show greater improvement from working as a group. Groups that receive teamwork training display more of the collaborative behaviors that help unlock the potential gains from working together.24

Teamwork does not always improve forecasts. The benefits of teamwork are vulnerable to overconfidence, as overly confident team members can unduly influence the group’s decision. Teams are most beneficial when each member’s confidence matches their accuracy.25

Optimism can also erode the benefits of teamwork. Teams are as susceptible to optimism bias as individuals.26 Teams tend to make riskier decisions as more cautious individuals fear being seen as the group’s pessimist.27 The gains from teamwork disappear if the group is given an overly optimistic starting point.28

Incentives

Forecasters can be incentivized to be more accurate. Forecasters perform better when they are told they will be evaluated on their accuracy, versus when told they will be evaluated on their process.29

Prediction markets are open platforms that allow bets on future events. The market crowdsources people’s bets on the probability that an event will occur and aggregates them into the market price. This is similar to stock trading, except buying low is betting on an event that others believe to be unlikely, and one’s “shares” grow more valuable as more people agree with one’s bet. Prediction markets with monetary stakes perform slightly better on average than markets that operate with fake money. Research has largely found that prediction markets perform similarly to other human forecasting methods. In an experiment where forecasters were assigned to individual predictions, team predictions, or prediction markets, markets performed better at the start of short-term questions, while team predictions were better at longer-term questions.30 Many of these experiments were completed on private, low-stakes prediction markets; public large-stakes markets raise the prospects of price manipulation and insider trading.31

The Delphi Method

The Delphi Method is a structured process to elicit human judgment forecasting from a group while minimizing the pathologies of group decision-making.32 Named for the Delphic oracle of Greek mythology, RAND developed this method in the 1950s to forecast the effects of technology on warfare.33

The method begins with a group of forecasters making predictions independently. The coordinators collect the predictions and then provide each forecaster with an overview of the results, including the group’s average. The coordinators may also share anonymous justifications that forecasters provided with their predictions.

Next, the forecasters are allowed to update their initial predictions. The results are again shared with the group. This process is repeated until the forecasts stabilize, or until the forecasters reach a consensus.34 Supporters of the Delphi Method explain that the key features are anonymity to free the individuals of any societal influence, feedback to expose the individuals to potentially differing views, and iteration to allow the feedback to have an impact.35
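The round structure described above can be sketched as a small simulation. Real participants revise their estimates using judgment; the mechanical update rule here (each forecaster closes a fixed share of the gap to the group median) and every name and number in it are hypothetical, chosen only to show how iteration with anonymous feedback can drive forecasts toward stability.

```python
from statistics import median


def delphi_rounds(initial, pull=0.5, tolerance=0.01, max_rounds=20):
    """Simulate Delphi-style rounds on a list of probability estimates.

    pull is a hypothetical behavioral assumption: the share of the gap to the
    group median that each forecaster closes per round. The loop stops once no
    estimate moves by more than tolerance (the forecasts have stabilized).
    Returns (final_estimates, rounds_used).
    """
    estimates = list(initial)
    for round_number in range(1, max_rounds + 1):
        # Anonymous feedback: everyone sees only the group summary.
        group_median = median(estimates)
        updated = [e + pull * (group_median - e) for e in estimates]
        largest_move = max(abs(u - e) for u, e in zip(updated, estimates))
        estimates = updated
        if largest_move <= tolerance:
            return estimates, round_number
    return estimates, max_rounds


# Hypothetical first-round answers to one yes-or-no question.
first_round = [0.10, 0.30, 0.35, 0.60, 0.90]
final, rounds = delphi_rounds(first_round)
print(rounds, [round(e, 3) for e in final])
```

Setting pull to 0 would model forecasters who ignore the feedback entirely, in which case the spread of estimates never narrows.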

The Delphi Method improves forecast accuracy, but its positive effects are similar to other group structures, most of which are faster and take less effort.36 Further, research on the Delphi Method is outdated and weakened by inconsistencies in its execution.37 Differences in who participates, what feedback is given, and how predictions are tabulated affect the outcome and make evaluation difficult.38 The RAND Corporation has published 50 studies using the Delphi Method in the last ten years, and recently developed the Delphi Critical Appraisal Tool to assess the quality of Delphi efforts, creating the potential for a reevaluation of the Delphi Method.39

Quantitative Support

An experiment during an Intelligence Community-sponsored forecasting competition found that giving human forecasters statistical model-based predictions only improved the accuracy of some of the forecasters, presumably those who knew when and how to use those predictions.40 Another forecasting experiment provided human forecasters with high-quality advice from either humans or an algorithm. Among the forecasters that received advice from an algorithm, those considered experts (i.e., those with national security experience) were more likely to ignore the advice and were ultimately less accurate than the non-experts.41 The quality of the predictive algorithm obviously matters; the next section dives deep into the details of quantitative predictions.

Hybrid Prediction

Another way to use statistical forecasts with human judgment forecasting is to combine them by taking their average.42 This method is sometimes called the Blattberg-Hoch approach, after Blattberg and Hoch, who argue that “any combinations of forecasts prove more accurate than the single inputs.”43

This method can be especially effective in cases when human judgment is likely to stray far from the pattern predicted by statistical forecasts.44 Two studies provided experts with quantitative predictions and had them make adjustments. In both cases, the average of the quantitative prediction and the human prediction outperformed each on its own. Weighting the two guesses equally proved to be optimal on average.45 It is worth noting that a core precept of good forecasting technique is to begin one’s analysis by evaluating historical patterns predicted by statistical forecasts, called the base rate.46
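The equal-weight combination described above amounts to a one-line calculation. The sketch below uses made-up forecasts, picked so that the model and the human err in different directions; it illustrates the mechanics and is not evidence for the finding. The function names and the use of mean squared error as the yardstick are my assumptions.

```python
def hybrid_forecast(model_prob, human_prob, model_weight=0.5):
    """Weighted average of a statistical forecast and a human forecast.

    model_weight=0.5 is the equal weighting described in the text.
    """
    if not 0.0 <= model_weight <= 1.0:
        raise ValueError("model_weight must be between 0 and 1")
    return model_weight * model_prob + (1.0 - model_weight) * human_prob


def mean_squared_error(forecasts, outcomes):
    """Average squared gap between forecasts and outcomes (lower is better)."""
    return sum((f - o) ** 2 for f, o in zip(forecasts, outcomes)) / len(outcomes)


# Made-up probabilities for four yes-or-no questions (1 = event happened).
model_probs = [0.60, 0.40, 0.90, 0.30]
human_probs = [0.90, 0.10, 0.50, 0.50]
outcomes = [1, 0, 1, 0]

hybrid_probs = [hybrid_forecast(m, h) for m, h in zip(model_probs, human_probs)]

model_mse = mean_squared_error(model_probs, outcomes)
human_mse = mean_squared_error(human_probs, outcomes)
hybrid_mse = mean_squared_error(hybrid_probs, outcomes)
print(model_mse, human_mse, hybrid_mse)
```

One property holds regardless of the data: the squared error of an equal-weight average can never exceed the average of the two inputs' squared errors. Whether the hybrid beats the better of the two inputs, as it does with these invented numbers, depends on how often their errors offset each other.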

Artificial Intelligence

Forecasters have begun using AI to compete in forecasting competitions with some success. An aggregate of 12 Large Language Models, including ChatGPT 4 and Claude 2, scored similarly to the human crowd in Metaculus’ Fall 2023 competition.47 The models are programmed to forecast using the same structured techniques as superforecasters. In Metaculus’ Fall 2025 forecasting competition, the eighth-place finisher was ManticAI, an artificial intelligence.48 There were caveats to this success. The human competitors were largely amateurs, and AIs have an advantage just by making predictions early and often.49 Indeed, human superforecasters still outperformed top-performing AIs.50 There is some evidence that human forecasting can be improved when paired with an advanced AI agent.51

There are two major challenges for AI forecasts in geopolitics: data scarcity and human unpredictability.52 Where those challenges are overcome, in weather forecasting, for example, AI is starting to match and even outperform some major forecasting models.53

Quantitative Prediction

How Does it Work?

Quantitative prediction uses statistical methods to find patterns in past data and extrapolate them into the future. The human brain may be capable of comparing and contrasting between two similar cases – the wars in Vietnam and Iraq, for instance – but computers are required to compare across many cases. The challenge is that one needs machine-readable information (data) to describe each variable of interest for each case.

The first step in quantitative prediction is to identify the outcome one aims to predict, such as whether a particular country will experience a severe civil war. Next, one considers the factors that might predict this outcome. To continue with the civil war example, one might consider government type, economic development, dependability of public services, whether it has recently experienced conflict, and other factors suggested by previous research. One would then collect historical data on each of these factors. Data in hand, one then conducts a statistical analysis to identify the relationships between those variables. The civil war analysis may show that across many different wars, as the size of opposing armies or the amount of third-party support increases, the severity of the civil war also tends to increase.

The statistical analysis helps one create a model, an equation that best predicts the outcome based on the input variables. One develops a model by choosing the functional form (e.g., linear regression) and the variables to include. These are important choices that can have a significant impact on one’s predictions.54 As it is often unclear beforehand which model specification is best, modelers can create multiple models and assess their performance over time.

The last step, once a model has been selected, is to apply the model to the input data to predict the outcome. While one can predict an outcome that will happen in the future, one still needs existing input data. Often, models use inputs from the current time period to predict outcomes for the next period. For example, a model trained on data ending in 2023 may make predictions for 2024.
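The steps above can be sketched end to end with a deliberately tiny example: one input variable, a linear functional form, and invented numbers. The variable names, the data, and the choice of ordinary least squares are all assumptions for illustration; a real model would draw on many cases and variables.

```python
def fit_line(xs, ys):
    """Ordinary least squares with one input: returns (intercept, slope)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("the input variable must vary across observations")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


# Invented data: an index of third-party support observed each year, and
# civil war severity (an invented scale) observed the following year.
support = {2018: 1.0, 2019: 2.0, 2020: 3.0, 2021: 4.0, 2022: 5.0, 2023: 6.0}
severity = {2019: 2.1, 2020: 3.9, 2021: 6.2, 2022: 7.8, 2023: 10.1}

# Pair each year's input with the NEXT year's outcome, so the fitted model
# can be applied to the latest available input (2023) to forecast 2024.
train_years = [year for year in support if year + 1 in severity]
xs = [support[year] for year in train_years]
ys = [severity[year + 1] for year in train_years]

intercept, slope = fit_line(xs, ys)
forecast_2024 = intercept + slope * support[2023]
print(round(slope, 2), round(forecast_2024, 2))
```

The lag between input and outcome is the key design choice: the 2024 forecast is only possible because the 2023 input already exists.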

An IARPA forecasting competition found that poor data quality on some topics resulted in extreme and unhelpful predictions.55 High-quality quantitative prediction depends on the availability of data that is complete, accurate, and relevant. These three qualities are important, and I offer brief definitions here:

Completeness refers to how well the data covers the full population or period. Rarely does one have access to every single data point. Instead, datasets are typically representative samples of the concept one is interested in. If data is collected non-randomly and thus excludes some parts of the population (called selection bias), the sample is not representative, and the quality of its forecasts will be harmed. For example, if data on diplomatic meetings is only drawn from publicly available news sources, secretive diplomacy will be excluded from the dataset. Such a dataset would be systematically biased, and any analysis drawn from that data would likely be misleading.

Accurate datasets are able to successfully measure reality. Faulty measurements lead to less accurate data. For instance, a database of deaths in a civil war based on participant reports may be less accurate than data gathered from morgues or reported by trusted IGOs and NGOs.

Relevant data refers to how useful the data is for predicting the outcome, sometimes called its predictive power. There are two dimensions to relevance. First, does the data capture the desired concept? For example, to measure ‘civil war intensity’, battle casualties seem a better measure than threats on social media. Second, is the concept represented by the data useful for predicting the outcome? Relevant concepts are usually identified from theories supported by past research. For instance, theories of civil war duration suggest we are better off relying on data on the number of weapons in a country rather than the number of computers.

It is important to recognize that quantitative models are not perfect reflections of reality. Measurement in the field of foreign policy requires the simplification of infinitely complex social realities that defy clean definitions. Simplification does not make a model useless. Models are intended to be parsimonious. One simply must understand the choices and tradeoffs made to produce the model.

What Does the Research Say?

The online stock trading commercials are right: past performance does not guarantee future results, but quantitative predictions can still be quite helpful.

The recent improvements in computing power, data availability, and quantitative methods have led to a rise in quantitative prediction efforts. In the field of international conflict, efforts such as the Political Instability Task Force (PITF), Integrated Crisis Early Warning System (ICEWS), Violence & Impacts Early-Warning System (VIEWS), and the Early Warning Project (EWP) demonstrate the value of quantitative prediction and offer lessons for improvement.

The CIA set up the Political Instability Task Force (PITF) in 1994 to predict state failure (e.g., due to civil war, a coup, or genocide). The task force developed a model to rank countries by risk. The task force considered over forty variables before settling on four in their final model.56 They trained the model on data from 1955 to 1994 and then ranked countries by their risk of instability every year from 1995 to 2004. In other words, the model predicted the countries most likely to fail. Setting a threshold that those in the top quintile of risk would experience instability, the model correctly predicted 86% of the instances of instability and 81% of continued stability.57
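The top-quintile evaluation described above can be sketched as follows. The ten countries, their risk scores, and their outcomes are invented, and the function names are hypothetical; the sketch only shows how a ranking becomes two hit rates, one for instability and one for continued stability.

```python
def top_quintile_flags(risk_scores):
    """Flag the highest-risk 20% of countries (at least one) as predicted unstable."""
    n_flagged = max(1, len(risk_scores) // 5)
    ranked = sorted(risk_scores, key=risk_scores.get, reverse=True)
    flagged = set(ranked[:n_flagged])
    return {country: country in flagged for country in risk_scores}


def hit_rates(flags, outcomes):
    """Return (share of unstable cases flagged, share of stable cases not flagged).

    Assumes the outcomes contain at least one unstable and one stable case.
    """
    unstable = [c for c in outcomes if outcomes[c]]
    stable = [c for c in outcomes if not outcomes[c]]
    caught = sum(flags[c] for c in unstable) / len(unstable)
    cleared = sum(not flags[c] for c in stable) / len(stable)
    return caught, cleared


# Invented model output: estimated risk of instability for ten countries.
risk = {"A": 0.30, "B": 0.25, "C": 0.12, "D": 0.08, "E": 0.07,
        "F": 0.05, "G": 0.04, "H": 0.03, "I": 0.02, "J": 0.01}

# Invented outcomes: countries A and C experienced instability.
became_unstable = {"A", "C"}
outcomes = {country: country in became_unstable for country in risk}

flags = top_quintile_flags(risk)
caught, cleared = hit_rates(flags, outcomes)
print(caught, cleared)
```

Moving the threshold trades one rate against the other: flagging more countries catches more instability but mislabels more stable countries.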

When researchers later reapplied the model to the 2005-2014 period, the model’s accuracy declined over time. It correctly predicted only 35% of instability cases. The immediate lesson is that the drivers of instability change over time – the drivers of instability in the post-9/11 era were different than those in the post-Cold War era. The larger lesson is that predictive models, whether quantitative models or mental models, must evolve over time lest they become outdated and lose accuracy.58

The Integrated Crisis Early Warning System (ICEWS) was a Department of Defense-funded project designed to forecast global instability events, including rebellion, ethnic/religious violence, and international crises. The project experimented with several different models and aggregated them based on which countries or events they performed best on. The constituent models included those focused on leaders and society based on subject matter expert surveys, models using events from text-parsing 6.5 million global news stories, and a geo-spatial model considering connections and interactions between neighboring countries. This combination of models reportedly reached over 90% accuracy for predicting every type of instability. It is worth noting that for rare events like rebellion and insurgency, where change is unusual, a model that always predicts a continuation of the previous year, known as a naive model, also achieved over 90% accuracy. The tougher but more important task is predicting when conditions will change. A naive model, by definition, predicts 0% of changes; the best ICEWS model was able to predict 56% of changes.59
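The gap between overall accuracy and accuracy on changes is easy to see in a toy calculation. The twenty country-years below are invented, as is the second model; the sketch shows why a naive model can look impressive on overall accuracy while catching no changes at all.

```python
def accuracy(predicted, actual):
    """Share of all cases predicted correctly."""
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


def share_of_changes_caught(predicted, actual, previous):
    """Among cases whose status differs from the previous year, the share predicted correctly."""
    changed = [(p, a) for p, a, prev in zip(predicted, actual, previous) if a != prev]
    if not changed:
        return None  # nothing changed, so the measure is undefined
    return sum(p == a for p, a in changed) / len(changed)


# Invented data for 20 countries: 1 = insurgency under way, 0 = none.
previous = [0] * 16 + [1] * 4
# This year: one new insurgency begins and one existing insurgency ends.
actual = [0] * 15 + [1] + [1] * 3 + [0]

# The naive model simply repeats last year's status.
naive = list(previous)

# A hypothetical model that matches the naive one except that it
# correctly anticipates the new insurgency.
model = list(previous)
model[15] = 1

print(accuracy(naive, actual), share_of_changes_caught(naive, actual, previous))
print(accuracy(model, actual), share_of_changes_caught(model, actual, previous))
```

Because change is rare, the two models differ only slightly in overall accuracy, while the share of changes caught separates them clearly.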

VIEWS is a conflict prediction system that ran a competition to predict monthly changes in the intensity of state-based violence, measured by the number of fatalities, at the state level and at the sub-state level using grid cells (Figure 3).60 Compared to PITF and ICEWS, VIEWS challenges forecasters to make predictions at a more granular level: the number of fatalities instead of a binary for instability, for months instead of years, for sub-state grid cells as well as countries. The competition submissions advanced the field with innovative data (e.g., geo-located peacekeeping units, COVID-19 deaths, internet search data) and algorithms (e.g., neural networks, machine learning). The results found that parsimonious models perform at least as well as models with many variables. More importantly, all the submissions were able to beat the benchmark and naive models, demonstrating that investment can lead to predictive gains on even the hardest tasks.61

Figure 3: VIEWS data dashboard of fatality predictions for January 2025.62

The Early Warning Project (EWP) from the Holocaust Memorial Museum estimates the percentage chance of new mass killings in each country for the next two years.63 They conducted an extensive review of scholarly literature to identify the causes of mass killing. They also collect data on demographics, socioeconomics, governance, human rights, and conflict history from 1960 or earlier. They train many models using different algorithms and variables, and they use the last two years of data to identify the most predictive. Predictive variables include civil liberty inequality, population size, a history of mass killing, infant mortality, coup attempts, political killings, battle deaths, and power monopolized by some social groups.64

The EWP advocates that policymakers pay close attention to those countries with the highest risk.65 The EWP’s December 2024 report highlights the top 30 at risk in 2024-2025 (Figure 4).

The EWP shows the importance of quickly gathering data. The EWP wants to use the most recent data, but some of the 2023 data is not ready until late 2024. Since a prediction for 2024 published at the end of 2024 is of little use, the EWP predicts a two-year window to provide policymakers with some information for 2025. In terms of accuracy, since 1994, EWP has demonstrated its predictive power by forecasting a higher risk in countries that experienced a mass killing (8%) than in those that did not (3%).

Figure 4: Early Warning Project's assessment of the 30 countries with the highest risk of mass killing for 2024-2025.66

Human Adjustments

Quantitative predictions can improve human forecasts; can human forecasts improve quantitative predictions? The evidence supporting the Blattberg-Hoch approach of combining quantitative and human predictions applies here, suggesting there are gains from human adjustments. A study of human adjustments to 60,000 forecasts by supply chain companies found that human adjustments increased accuracy on average. The study also found evidence of optimism bias, demonstrating that positive adjustments by humans to the algorithmic estimates tended to be less accurate than their negative adjustments.67

Foresight

How Does it Work?

Foresight is thinking about possible futures and the opportunities and challenges they could bring. Foresight is not about predicting what will happen, but rather about what may happen.68 Foresight considers different plausible futures and the challenges that each entails to help organizations or individuals prepare for any future.69

As a recent review put it, “Foresight cannot predict the future nor reduce uncertainty. What foresight can do is to explore a wide range of possible futures and to engage participants in ‘what if’ exercises to help them deal with uncertainty and better understand possible consequences at the system level and inform their decision-making to be more effective at creating the future they want.”70

The US Office of Management and Budget characterizes foresight as “insight into how and why the future might be different from the present.” It differentiates this from forecasting, “which seeks to make statements or assertions about future events based on quantitative and qualitative analysis and modeling.”71

The UK Office for Science gives a broader definition: “Foresight refers to the application of specific tools/methods for conducting futures work, for example, horizon scanning (gathering intelligence about the future) and scenarios (describing what the future might be like).” Some descriptions of foresight are directly in line with rational choice theory – trying to better understand the possible outcomes of choices to make a sound decision.72 Similarly, corporate foresight is when private-sector firms identify and interpret factors that are likely to threaten the status quo, and propose options for preparing appropriately.73

Foresight practice is being intentional and systematic about foresight efforts to increase their effectiveness. Exact foresight processes vary, but their steps usually include some form of Framing, Scanning, Hypothesizing, Workshopping, and Broadcasting.

Framing

Framing defines the bounds of the issue or problem to be studied. This could include (but is not limited to) identifying key questions, selecting topics, setting time horizons, or establishing geographic boundaries.74 Narrower framings allow for quicker, cheaper, and/or more detailed analysis, but they also risk missing relevant future challenges that fall outside of the framing.

For example, the US Coast Guard runs a strategic foresight process every four years called Project Evergreen. The framing for Evergreen V, started in 2018, focused on what global and regional changes might mean for the Coast Guard’s operations 15-25 years in the future.75

Scanning

Scanning is the search for signals and drivers that portend impactful trends, surprises, and possible futures.76 Signals are “early whispers of change,” and drivers are “forces that propel and shape change”.77 The National Intelligence Council’s quadrennial Global Trends report is an example of such a scan; the 2021 report reviews trends in demographics, environment, economics, and technology.78

Scanning can target a wide range of sources, including expert interviews, group discussions, sector reports, social media, and mainstream news. A scan evaluating how future technology may change war efforts might collect information from industry associations, conference presentations, military projects, and patent filings. As weak signals can come from unexpected issues, some recommend scanning broadly beyond the immediate subject, for example, by following the PESTLE taxonomy: political, economic, social, technological, legal, and environmental.79 This taxonomy can also be used for Driver Mapping to see what areas are absent from a scan.80

Scans may include tools such as the previously discussed Delphi Method as well as others like System Mapping, Seven Questions, and Three Horizons. System Mapping is creating a visualization of key elements in a system (nodes) and the relationships between them (links) to identify areas of possible change worth scanning.81 Seven Questions is a guided framework for interviews.82 As part of framing and scanning, one should also identify the assumptions held about the topic to be interrogated later.83 Three Horizons is a technique to explore assumptions over three different time horizons.84

Horizon Scanning is a systematic process to detect early signs of important future developments. These efforts are similar enough to Foresight to include here, but adopt some different steps and frameworks. What Foresight calls ‘scanning’, Horizon Scanning may further subdivide into gathering information, filtering, prioritizing, analysis, and dissemination.85

In 2009, a horizon scan to identify future trends relevant for EU policymaking identified six relevant areas: demography/migration, economy/trade, environment/energy, research/innovation, governance/social cohesion, and defense/security. Experts analyzed 20-25 reports on each area and identified 370 specific issues, such as a global decline in defense spending or radical groups deploying more sophisticated violence. Each of these specific issues was then rated on relevance, novelty, and probability using a survey of 270 external experts. For example, NATO’s increased openness to outside partnerships would be highly relevant. The widespread diffusion of surveillance sensors globally by 2020 was judged to be relevant, likely, and highly novel. A major war by 2020 was judged highly relevant but unlikely.86
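The rating-and-filtering step of a scan like this can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reconstruction of the EU exercise: the issue names, the 1-5 rating scale, the individual scores, and the relevance cutoff are all hypothetical.

```python
from statistics import mean

# Hypothetical expert ratings on an assumed 1-5 scale (illustrative values only).
ratings = {
    "Issue A": {"relevance": [5, 4, 5], "novelty": [2, 3, 2], "probability": [1, 2, 1]},
    "Issue B": {"relevance": [4, 4, 3], "novelty": [5, 4, 5], "probability": [4, 5, 4]},
    "Issue C": {"relevance": [2, 1, 2], "novelty": [3, 3, 2], "probability": [3, 4, 3]},
}

def summarize(ratings):
    """Average each criterion across experts for every issue."""
    return {
        issue: {criterion: mean(scores) for criterion, scores in by_criterion.items()}
        for issue, by_criterion in ratings.items()
    }

def prioritize(summary, min_relevance=3.5):
    """Keep issues at or above an assumed relevance cutoff, most relevant first."""
    kept = [(issue, s) for issue, s in summary.items() if s["relevance"] >= min_relevance]
    return sorted(kept, key=lambda item: item[1]["relevance"], reverse=True)

summary = summarize(ratings)
shortlist = prioritize(summary)
for issue, s in shortlist:
    print(issue, {criterion: round(value, 2) for criterion, value in s.items()})
```

Keeping the three criteria separate, rather than collapsing them into one score, preserves the distinction the scan drew between issues that are highly relevant but unlikely and issues that are relevant, likely, and novel.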

Scenarios

The next step is to make sense of and gain insights from the signals and trends. This is typically achieved by creating and discussing scenarios, often in a workshop setting, to identify possible futures and explore what each future would mean for the participants, as well as how they would prepare for it. By imagining themselves in these scenarios, participants identify how the current policies and plans are insufficient for possible futures.87 Scenario analysis has also been used in academic settings to spark overlooked research agendas that may be highly relevant in the future.88

Scenarios can be built around Change Drivers – statements that explain a group of signals – or by taking two axes of uncertainty and using them to create a 2x2 matrix with four possible scenarios.89 A SWOT analysis (Strengths, Weaknesses, Opportunities, Threats) can also inform the scenarios. Visioning, Backcasting, and Roadmapping are approaches that start by envisioning an ideal future scenario and then identifying the steps needed to connect it to the present. Future Wheel is a tool to structure brainstorming around future events and causalities.90 Scenario Stress-Testing is injecting specific situations into a scenario to compare responses.91
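The 2x2 construction is simple enough to sketch in Python. The two axes and their endpoint labels below are hypothetical placeholders chosen for illustration, not drawn from any cited study. The sketch shows only that crossing the two poles of each uncertainty yields four scenario frames for a workshop to flesh out.

```python
from itertools import product

def scenario_matrix(axis_a, axis_b):
    """Cross the two poles of each uncertainty axis into four scenario frames.

    Each axis is a (name, (first_pole, second_pole)) pair.
    """
    name_a, poles_a = axis_a
    name_b, poles_b = axis_b
    return [{name_a: a, name_b: b} for a, b in product(poles_a, poles_b)]

# Hypothetical axes of uncertainty, for illustration only.
axes = (
    ("great-power relations", ("cooperative", "confrontational")),
    ("pace of technology diffusion", ("slow", "fast")),
)

scenarios = scenario_matrix(*axes)
for number, frame in enumerate(scenarios, start=1):
    print(f"Scenario {number}: {frame}")
```

The code produces only the skeleton of each scenario. The narrative detail that makes a scenario useful still has to come from the participants.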

Informing

The final step is ensuring that the ideas and insights from the scenarios inform future decisions, policies, and plans. This could include written products that summarize weak signals and drivers of change, possible future scenarios, and patterns of challenges and opportunities.92 Firms and organizations may have existing change management processes to connect the results to, or may set up new steps towards transformation.93

Foresight proponents also argue that participants are informed just by participating. During the scenarios, participants clarify and test their assumptions, develop mental models of the system they operate in and how it can change, and identify future challenges, opportunities, and uncertainties. Importantly, they have done this as a group, thus creating a shared understanding and sense of purpose as they move forward together.94

Decision Making Under Deep Uncertainty

Decision Making Under Deep Uncertainty, also called Robust Decision Making (RDM), was developed by scientists at RAND as a decision-making tool (whereas foresight and other prediction methods are analysis tools). Deep Uncertainty is defined as when parties to a decision cannot agree on the likelihood of future events or the consequences of policy choices.95

The goal of RDM is to choose a strategy that is robust, meaning that it “performs well, compared to the alternatives, over a wide range of possible futures.” The procedure leads analysts to frame their problem and goals, and then extrapolate a wide range of plausible futures. The likely effects of any proposed policy responses are then predicted for each of the diverse conditions presented by the many plausible futures. This exploratory modeling generates a map of outcomes that reveals where and why particular strategies might fail. Analysts then identify the vulnerabilities of each strategy through scenario discovery and refine the options by incorporating adaptive elements that can respond to changing circumstances.

For example, an early RDM study on reducing greenhouse gas emissions while supporting economic growth started with two policy choices: whether to have carbon taxes and whether to subsidize low-carbon technologies. It then identified important inputs such as the future impacts of climate change and the cost and performance of solar technology, each of which had several potential values. It ultimately identified thirty total inputs, which it combined to create 1,611 plausible futures.96

In this study, the models showed that it was always better to have a carbon tax. It then looked at which futures supported having a subsidy and found that subsidies were worthwhile except in futures where climate change impacts were small and technology easily diffused. Assigning probabilities to those two inputs, the study concludes that taxes and subsidies are more likely to achieve the policy goals.97
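The mechanics of this kind of analysis can be sketched in Python. Everything numeric below is an assumption made for illustration: the payoff function, the three levels for each of two inputs, and the use of minimax regret as the robustness criterion are not taken from the study, which used thirty inputs and its own models. The sketch enumerates futures, scores each strategy in each future, picks the strategy whose worst-case shortfall is smallest, and then lists the futures in which that strategy still falls short, as a crude stand-in for scenario discovery.

```python
from itertools import product

# Two yes/no policy levers, as in the study: (carbon tax, technology subsidy).
strategies = list(product([False, True], repeat=2))

# Two uncertain inputs with three hypothetical levels each.
climate_impact_levels = [0.2, 0.5, 0.8]   # severity of climate impacts
diffusion_ease_levels = [0.2, 0.5, 0.8]   # how easily technology spreads unaided
futures = list(product(climate_impact_levels, diffusion_ease_levels))

def score(strategy, future):
    """Toy payoff invented for illustration; it is not the study's model."""
    tax, subsidy = strategy
    impact, ease = future
    total = 0.0
    if tax:
        total += 2.0 * impact + 0.2
    if subsidy:
        total += 3.0 * impact * (1.0 - ease) - 0.4
    return total

def regret_table(strategies, futures):
    """Regret = shortfall relative to the best strategy in the same future."""
    table = {s: {} for s in strategies}
    for f in futures:
        best = max(score(s, f) for s in strategies)
        for s in strategies:
            table[s][f] = best - score(s, f)
    return table

table = regret_table(strategies, futures)
max_regret = {s: max(by_future.values()) for s, by_future in table.items()}
robust = min(max_regret, key=max_regret.get)

# Futures in which the chosen strategy is not the best available option.
vulnerable = [f for f, r in table[robust].items() if r > 1e-9]

print("Most robust (tax, subsidy):", robust)
print("Futures where it falls short (impact, ease):", vulnerable)
```

With these made-up numbers, the combined tax-and-subsidy strategy has the smallest worst-case regret, and it falls short only in futures with low climate impact or easy diffusion. That pattern is built into the toy payoff function and is not evidence for the study's finding.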

What Does the Research Say?

Evaluation of foresight focuses heavily on outputs such as the number of publications and participant satisfaction surveys, and looks for evidence of impact such as endorsements from decision-makers and references in policy documents. This is also true for horizon scans; an early assessment of the EU exercise reported metrics such as participant satisfaction, a claim that the recommendations were useful in discussions in the European Commission, and the citation of the final report in the 2010 Communication on the Innovation Union.98

Unlike human judgment and quantitative prediction, the foresight community has shown little interest in evaluating the accuracy of its forays into the future. As one evaluation explains, “foresight cannot predict the future nor reduce uncertainty.”99 The word ‘prediction’ itself is viewed so negatively that even theorizing the effects of foresight practice is discouraged.100 As such, relative to human judgment and quantitative prediction, the research on foresight is underwhelming and often ad hoc.

One of the more interesting studies looked at the foresight needs and practices of 70 firms in 2008 and compared them to their profitability in 2015. Firms with foresight practice levels equal to their needs (termed vigilant) had an average profitability of 16%, compared to 10% in firms with foresight practices one level lower (vulnerable) or higher (neurotic) than their needs (Figure 5). This research suggests that foresight is useful, but only up to a certain point! Too much foresight may be just as costly as too little foresight.101

Figure 5: Average profitability of firms by future preparedness levels.102

When large firms were asked about the benefits of their strategic foresight practices, approximately one-third confirmed that foresight led to insights into changes in the environment (35%), helped understand the market (31%), and aided in understanding customer needs (30%). Fewer affirmed that their foresight practices identified opportunities and threats to their portfolio (26%), reduced uncertainty (25%), created the ability to adopt alternative perspectives (20%), facilitated organizational learning (17%), and reduced uncertainty in R&D (11%). Top performing firms report more value from their strategic foresight than low performing firms.103 All of this data is self-reported and thus must be taken with a grain of salt.

A case study of a Scotch whisky manufacturer found that a series of scenario workshops helped the management team face operational realities and let go of tightly-held assumptions that had served them poorly.104

Some research in this space is suspect. One team of researchers surveyed 66 major firms and concluded that the use of foresight practices is positively associated with the use of foresight in a firm’s strategy process.105 If that sounds circular, that’s because it is, like claiming “the patients who received the penicillin treatment were overwhelmingly found to have penicillin in their bloodstream.” Research design should always be scrutinized, especially in foresight studies where some researchers work as corporate foresight consultants and have a strong incentive to find foresight beneficial.

Foresight is already common in the US government. In 2018, 18 federal agencies ranging from the CIA to FEMA to the Office of Personnel Management reported using at least one foresight method, and the number has risen since.106 In 2022, the US State Department set up a Policy Risk and Opportunity Planning Group to conduct strategic foresight and proactive policy planning.107 Its creation was spurred by State’s after-action review of the Afghanistan withdrawal, which recommended better planning for worst-case scenarios.108 The Office of Personnel Management has a guide for how all Federal agencies can implement foresight.109

The US Coast Guard began cyclical scenario planning exercises with Project Long View in 1998 and has continued them with Project Evergreen since 2003. A 2021 review found that more than 1,000 personnel have participated over the effort’s history.110 Participants reported bringing the strategic foresight perspective back to their station and their colleagues. Some said the exercise allowed them to think more about the future and the bigger picture in their daily activities. Some of the planning from the 1998 session allowed the Coast Guard to adapt rapidly to a post-9/11 reality.111

One might be especially skeptical when foresight programs are a one-off and not incorporated into existing planning processes. In case studies of foresight exercises by Dutch provinces and municipalities, researchers struggled to find a connection between the exercises and subsequent policy analysis and strategy. Researchers found that inexperience, poor timing, organizational culture, and lack of leadership hindered any impact. Interviews with national ministers similarly found room for improvement in connecting foresight studies to decision-making.112

When participants in the 1997 New Zealand Health Sector Scenarios were asked twenty years later about impact, they said that the scenarios were discussed for a short time but quickly forgotten. One participant suggested scenario thinking would take years to properly embed.113

There is evidence that foresight programs affect the opinions of the participants. In scenario workshops on infrastructure investments, participants changed their opinions on certain investments depending on how useful the investments were in their scenario.114 It just isn’t clear whether those changes in opinion translate into any broader impact.

A scenario workshop in Mali centered on what local agriculture should look like in 15 years. A year after the workshop, 92% of participants agreed that it helped them build relationships, 65% said it enabled them to learn from different perspectives, 62% credited it with bringing about novel changes in their behavior, and 15% developed a deeper understanding of systems. However, the researchers concede that the behavior may not have been novel or attributable to the workshop. They conclude, “there was only debatable evidence of transformational change amongst individuals … and no obvious alterations to policies or policy-making norms.”115

Of the 12 studies on foresight outcomes included in a recent review by the Association of Professional Futurists, two examined the effects of foresight training at the individual level.116 One found that university students who had taken a futures course were more likely than their peers to agree with statements related to openness to alternatives, suggesting the class may have positively affected their ability to make good decisions. But the same study found that the students showed no difference on statements related to long-term thinking and were less likely to agree with statements on concern for others.117 The other only examined whether students engaged with the material and liked the course.118 Such results make clear conclusions difficult.

Foresight’s Resistance to Evaluation

The Association of Professional Futurists reported, “Despite 40+ years of scholarship on the benefits of evaluation and its application in actual foresight initiatives in the peer review literature, the field has been very slow to assess the quality and impact of its work.”119

One review summarizes some of the key challenges. “Foresight evaluation needs to address specific challenges such as complexity, long-time horizons, the qualitative nature of the work, and documenting largely intangible and multiple foresight outcomes. However, these challenges are not unique to foresight work.”120

Other reviews find that mainstream foresight research lacks theoretical frameworks, weakening its contribution to scientific knowledge.121 The scenario literature is dominated by case studies written by advocates trumpeting their positive outcomes, which is useful for advocacy but less so for knowledge building.122 The field’s resistance to scientific theory is exacerbated by apparent misunderstandings of what theory and scientific assessment are and outright rejection of mainstream scientific research.123

Another hindrance is the “cult of personalities” around foresight practitioners who, in the words of one scholar, resist scientific theory and method as potential threats to their professional identity. Based on their experience in the foresight community, they conclude, “Efforts to persuade practitioners to adapt their processes and practices on the basis of scientifically sound theory and research, without first addressing more directly the inherently cognitive-affective, social, cultural, and political dimensions of their work, will ultimately fail to achieve the desired outcomes, to the mutual detriment of science and practice.”124 Another scholar believes that to advance foresight as a science, scientists must challenge and beat out non-scientists in the marketplace of ideas.125

Conclusion

This report reviewed a large tranche of research related to several prominent methods for understanding and predicting the future. Recent developments in human forecasting evaluation and quantitative methods have led to an uptick in research on how these methods can best predict future events.

The accuracy of a given method varies greatly with how well it is implemented. Human forecasting and quantitative predictions have had significant advances in identifying when each will perform better and worse, thanks to their focus on evaluation. Before other methods are discarded for poor performance or lack of evidence, it should be clear why they performed poorly and whether there are any simple remedies.

This review identified several significant gaps in our understanding of forecasting and prediction methods. Studies have repeatedly shown that the details of when and how a method is applied are crucial to understanding its usefulness. While this review highlighted studies used in a foreign policy setting, the vast majority of forecasting applications and the resulting lessons are from other domains, mostly in the private sector. Lessons drawn from those domains should be re-evaluated as they are applied to foreign policy to assess their validity.

Foresight has not had a similar boost and currently has little evidence beyond the anecdotal to support its added value beyond commonplace planning practices. This is not to say that foresight is not worth its cost in time and effort. The meager evidence that does exist suggests that foresight may be more effective with leadership buy-in, participant familiarity, and embedding it in the policy process. This is partly because accuracy isn’t necessarily the goal for foresight. But without clear measures of effects and outcomes, we are left with no approach to assessing the benefits of these methods. Additional work to develop and test this class of methods is welcome.

Ultimately, the biggest question is whether better predictions lead to better policy, and to better outcomes in general. This is the classic problem of focusing on outputs – accuracy – when we really care about better outcomes. The question we care most about is the extent to which improved forecasting and prediction can improve the effectiveness of a policy’s impact in the real world. It is generally assumed that a more accurate prediction will cause better outcomes. This is a strong assumption that may not be true. For example, in a follow-up review of the forecasting tournament sponsored by the Intelligence Community, scholars found virtually no evidence that even the most accurate forecasts were used in intelligence products, let alone by policymakers.126

As in the Greek myth of Cassandra, knowing the future but being cursed to be ignored is unhelpful. An undertheorized question that emerges from this research is: How does one integrate futurism into the decision process? fp21 has previously described four possible models for integrating forecasting into policymaking (Figure 6).127 Future publications will need to examine how these methods can support policymakers in the policymaking process.

Figure 6: Four Models for Integrating Forecasting into Policymaking.128

The tools and techniques reviewed in this report carry important implications for every stage of the decision-making process. However, further research is needed to understand exactly how these tools can be deployed. One study found that decision-makers are more likely to avoid worst-case outcomes when analysis is presented with an emphasis on worst-case scenarios versus an emphasis on most-likely scenarios.129 Others propose combining scenarios with clusters of human judgment forecasts that give a sense of how likely each possible future is.130 This sort of research into blending techniques to inform decision-making, especially in the foreign policy space, remains limited but presents exciting opportunities.

Finally, there is almost no discussion about the costs associated with each method. While evidence suggests that predictive forecasting is more effective than human judgment in many settings, it may still be impractical for an organization to implement if it requires training or costly data collection. Foresight can also take significant resources.131 According to the Canadian government’s foresight center, a foresight study on a complex issue can take 2 to 12 months.132

The research shows that forecasting and prediction methods have improved to where it would be irresponsible to ignore them. However, it also suggests that care should be taken in their application and that they should be continuously evaluated and adapted. The future is indeed a moving target; our policy processes must move with it.

Acknowledgements: The author wishes to thank Dan Spokojny for significant contributions in conceptualization, writing and editing. Additional thanks to Gary Gomez and Darrow Godeski Merton for their thoughtful comments.

End Notes:

[1]: Zellner, M., Abbas, A. E., Budescu, D. V., & Galstyan, A. (2021). A survey of human judgement and quantitative forecasting methods. Royal Society Open Science, 8(2), 201187. https://doi.org/10.1098/rsos.201187

[2]: Tetlock, P. E. (2005). Expert Political Judgment: How Good Is It? How Can We Know? (REV-Revised). Princeton University Press. https://www.jstor.org/stable/j.ctt1pk86s8

[3]: Ægisdóttir, S., White, M. J., Spengler, P. M., Maugherman, A. S., Anderson, L. A., Cook, R. S., Nichols, C. N., Lampropoulos, G. K., Walker, B. S., Cohen, G., & Rush, J. D. (2006). The Meta-Analysis of Clinical Judgment Project: Fifty-Six Years of Accumulated Research on Clinical Versus Statistical Prediction. The Counseling Psychologist, 34(3), 341–382. https://doi.org/10.1177/0011000005285875; Grove, W. M., Zald, D. H., Lebow, B. S., Snitz, B. E., & Nelson, C. (2000). Clinical versus mechanical prediction: A meta-analysis. Psychological Assessment, 12(1), 19–30. https://doi.org/10.1037/1040-3590.12.1.19; Kuncel, N. R., Klieger, D. M., Connelly, B. S., & Ones, D. S. (2013). Mechanical versus clinical data combination in selection and admissions decisions: A meta-analysis. Journal of Applied Psychology, 98(6), 1060–1072. https://doi.org/10.1037/a0034156; Zellner et al., A survey of human judgement

[4]: Tetlock, P. E., Mellers, B. A., & Scoblic, J. P. (2017). Bringing probability judgments into policy debates via forecasting tournaments. Science, 355(6324), 481–483.

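[5]: Director of National Intelligence. (2015, January 2). Intelligence Community Directive 203: Analytic Standards. https://www.dni.gov/files/documents/ICD/ICD-203.pdf

[6]: Director of National Intelligence. (2015, January 2). Intelligence Community Directive 203: Analytic Standards. https://www.dni.gov/files/documents/ICD/ICD-203.pdf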

[7]: Schoemaker, P. J. H., & Tetlock, P. E. (2016, May 1). Superforecasting: How to Upgrade Your Company’s Judgment. Harvard Business Review. https://hbr.org/2016/05/superforecasting-how-to-upgrade-your-companys-judgment

[8]: Schoemaker, P. J. H., & Tetlock, P. E. (2016, May 1). Superforecasting: How to Upgrade Your Company’s Judgment. Harvard Business Review. https://hbr.org/2016/05/superforecasting-how-to-upgrade-your-companys-judgment

[9]: Chang, W., Chen, E., Mellers, B., & Tetlock, P. (2016). Developing expert political judgment: The impact of training and practice on judgmental accuracy in geopolitical forecasting tournaments. Judgment and Decision Making, 11(5), 509–526. https://doi.org/10.1017/S1930297500004599; Kahneman, D., & Tversky, A. (1973). On the psychology of prediction. Psychological Review, 80(4), 237; Mellers, B., Stone, E., Murray, T., Minster, A., Rohrbaugh, N., Bishop, M., Chen, E., Baker, J., Hou, Y., Horowitz, M., Ungar, L., & Tetlock, P. (2015). Identifying and Cultivating Superforecasters as a Method of Improving Probabilistic Predictions. Perspectives on Psychological Science, 10(3), 267–281. https://doi.org/10.1177/1745691615577794; Tversky, A., & Kahneman, D. (1981). Evidential impact of base rates. https://apps.dtic.mil/sti/html/tr/ADA099501/

[10]: Karvetski, C. W., Meinel, C., Maxwell, D. T., Lu, Y., Mellers, B. A., & Tetlock, P. E. (2022). What do forecasting rationales reveal about thinking patterns of top geopolitical forecasters? International Journal of Forecasting, 38(2), 688–704.

[11]: Benjamin, D. M., Morstatter, F., Abbas, A. E., Abeliuk, A., Atanasov, P., Bennett, S., Beger, A., Birari, S., Budescu, D. V., Catasta, M., Ferrara, E., Haravitch, L., Himmelstein, M., Hossain, K. T., Huang, Y., Jin, W., Joseph, R., Leskovec, J., Matsui, A., … Galstyan, A. (2023). Hybrid forecasting of geopolitical events. AI Magazine, 44(1), 112–128. https://doi.org/10.1002/aaai.12085

[12]: Green, K. C., & Armstrong, J. S. (2007). Structured analogies for forecasting. International Journal of Forecasting, 23(3), 365–376. https://doi.org/10.1016/j.ijforecast.2007.05.005

[13]: Mellers et al., Identifying and Cultivating Superforecasters

[14]: Lawrence, M., Goodwin, P., O’Connor, M., & Önkal, D. (2006). Judgmental forecasting: A review of progress over the last 25 years. International Journal of Forecasting, 22(3), 493–518. https://doi.org/10.1016/j.ijforecast.2006.03.007

[15]: Mellers et al., Identifying and Cultivating Superforecasters

[16]: Tetlock, Expert Political Judgment

[17]: Tetlock, Expert Political Judgment

[18]: Mellers et al., Identifying and Cultivating Superforecasters

[19]: Mellers et al., Identifying and Cultivating Superforecasters

[20]: Mellers et al., Identifying and Cultivating Superforecasters. Fluid intelligence was measured by Raven’s Advanced Progressive Matrices, and the Cognitive Reflection Test measured their ability to reevaluate incorrect responses that look right at first glance.

[21]: Mellers et al., Identifying and Cultivating Superforecasters

[22]: Tetlock, Expert Political Judgment

[23]: Horowitz, M., Stewart, B. M., Tingley, D., Bishop, M., Resnick Samotin, L., Roberts, M., Chang, W., Mellers, B., & Tetlock, P. (2019). What Makes Foreign Policy Teams Tick: Explaining Variation in Group Performance at Geopolitical Forecasting. The Journal of Politics, 81(4), 1388–1404. https://doi.org/10.1086/704437; Mellers et al., Identifying and Cultivating Superforecasters; Mellers, B. A., McCoy, J. P., Lu, L., & Tetlock, P. E. (2024). Human and Algorithmic Predictions in Geopolitical Forecasting: Quantifying Uncertainty in Hard-to-Quantify Domains. Perspectives on Psychological Science, 19(5), 711–721. https://doi.org/10.1177/17456916231185339; Önkal, D., Lawrence, M., & Zeynep Sayım, K. (2011). Influence of differentiated roles on group forecasting accuracy. International Journal of Forecasting, 27(1), 50–68. https://doi.org/10.1016/j.ijforecast.2010.03.001

[24]: Horowitz et al., What Makes Foreign Policy Teams Tick

[25]: Silver, I., Mellers, B. A., & Tetlock, P. E. (2021). Wise teamwork: Collective confidence calibration predicts the effectiveness of group discussion. Journal of Experimental Social Psychology, 96, 104157. https://doi.org/10.1016/j.jesp.2021.104157

[26]: Buehler, R., Messervey, D., & Griffin, D. (2005). Collaborative planning and prediction: Does group discussion affect optimistic biases in time estimation? Organizational Behavior and Human Decision Processes, 97(1), 47–63

[27]: Önkal et al., Influence of differentiated roles on group forecasting accuracy

[28]: Önkal et al., Influence of differentiated roles on group forecasting accuracy

[29]: Chang, W., Atanasov, P., Patil, S., Mellers, B. A., & Tetlock, P. E. (2017). Accountability and adaptive performance under uncertainty: A long-term view. Judgment and Decision Making, 12(6), 610–626. https://doi.org/10.1017/S1930297500006732

[30]: Atanasov, P., Rescober, P., Stone, E., Swift, S. A., Servan-Schreiber, E., Tetlock, P., Ungar, L., & Mellers, B. (2017). Distilling the Wisdom of Crowds: Prediction Markets vs. Prediction Polls. Management Science, 63(3), 691–706. https://doi.org/10.1287/mnsc.2015.2374

[31]: McHugh, C. (2025, October 24). Pollsters Have a New Kind of Competitor. They Should Be Worried. POLITICO. https://www.politico.com/news/magazine/2025/10/24/political-betting-markets-political-predictions-accuracy-00620431

[32]: Gordon, T. J., & Helmer-Hirschberg, O. (1964). Report on a Long-Range Forecasting Study. RAND Corporation. https://www.rand.org/pubs/papers/P2982.html

[33]: Khodyakov, D., Grant, S., Kroger, J., & Bauman, M. (2023). RAND Methodological Guidance for Conducting and Critically Appraising Delphi Panels. RAND Corporation. https://www.rand.org/pubs/tools/TLA3082-1.html; Rescher, N. (1997). Predicting the future: An introduction to the theory of forecasting. State University of New York Press.

[34]: de Loë, R. C., Melnychuk, N., Murray, D., & Plummer, R. (2016). Advancing the State of Policy Delphi Practice: A Systematic Review Evaluating Methodological Evolution, Innovation, and Opportunities. Technological Forecasting and Social Change, 104, 78–88. https://doi.org/10.1016/j.techfore.2015.12.009; Linstone, H. A., & Turoff, M. (2011). Delphi: A brief look backward and forward. Technological Forecasting and Social Change, 78(9), 1712–1719. https://doi.org/10.1016/j.techfore.2010.09.011; Nasa, P., Jain, R., & Juneja, D. (2021). Delphi methodology in healthcare research: How to decide its appropriateness. World Journal of Methodology, 11(4), 116–129. https://doi.org/10.5662/wjm.v11.i4.116; Shang, Z. (2023). Use of Delphi in health sciences research: A narrative review. Medicine, 102(7), e32829. https://doi.org/10.1097/MD.0000000000032829

[35]: Khodyakov et al., RAND Methodological Guidance for Conducting and Critically Appraising Delphi Panels.

[36]: Woudenberg, F. (1991). An evaluation of Delphi. Technological Forecasting and Social Change, 40(2), 131–150. https://doi.org/10.1016/0040-1625(91)90002-W

[37]: Nasa et al., Delphi methodology in healthcare research; Shang, Use of Delphi in health sciences research

[38]: Dalkey, N., & Helmer, O. (1963). An Experimental Application of the Delphi Method to the Use of Experts. Management Science, 9(3), 458–467; de Loë, R. C., Melnychuk, N., Murray, D., & Plummer, R. (2016). Advancing the State of Policy Delphi Practice: A Systematic Review Evaluating Methodological Evolution, Innovation, and Opportunities. Technological Forecasting and Social Change, 104, 78–88. https://doi.org/10.1016/j.techfore.2015.12.009; Drumm, S., Bradley, C., & Moriarty, F. (2022). ‘More of an art than a science’? The development, design and mechanics of the Delphi Technique. Research in Social and Administrative Pharmacy, 18(1), 2230–2236. https://doi.org/10.1016/j.sapharm.2021.06.027

[39]: Khodyakov et al., RAND Methodological Guidance for Conducting and Critically Appraising Delphi Panels. The studies count is based on search results from the RAND website: https://www.rand.org/topics/delphi-method.html

[40]: Benjamin et al., Hybrid forecasting of geopolitical events

[41]: Logg, J. M., Minson, J. A., & Moore, D. A. (2019). Algorithm appreciation: People prefer algorithmic to human judgment. Organizational Behavior and Human Decision Processes, 151, 90–103. https://doi.org/10.1016/j.obhdp.2018.12.005

[42]: Lawrence et al., Judgmental forecasting

[43]: Blattberg, R. C., & Hoch, S. J. (1990). Database Models and Managerial Intuition: 50% Model + 50% Manager. Management Science, 36(8), 887–899. https://doi.org/10.1287/mnsc.36.8.887, as cited in Petropoulos, F., Fildes, R., & Goodwin, P. (2016). Do ‘big losses’ in judgmental adjustments to statistical forecasts affect experts’ behaviour? European Journal of Operational Research, 249(3), 842–852.

[44]: Petropoulos et al., Do ‘big losses’ in judgmental adjustments to statistical forecasts affect experts’ behaviour?

[45]: Wang, X., & Petropoulos, F. (2016). To select or to combine? The inventory performance of model and expert forecasts. International Journal of Production Research, 54(17), 5271–5282. https://doi.org/10.1080/00207543.2016.1167983; Franses, P. H., & Legerstee, R. (2011). Combining SKU-level sales forecasts from models and experts. Expert Systems with Applications, 38(3), 2365–2370.

[46]: Chang, W., Chen, E., Mellers, B., & Tetlock, P. (2016). Developing expert political judgment: The impact of training and practice on judgmental accuracy in geopolitical forecasting tournaments. Judgment and Decision Making, 11(5), 509–526. https://doi.org/10.1017/S1930297500004599; Kahneman & Tversky, On the psychology of prediction; Mellers et al., Identifying and Cultivating Superforecasters; Tversky & Kahneman, Evidential impact of base rates.

[47]: Schoenegger, P., Tuminauskaite, I., Park, P. S., Bastos, R. V. S., & Tetlock, P. E. (2024). Wisdom of the silicon crowd: LLM ensemble prediction capabilities rival human crowd accuracy. Science Advances, 10(45), eadp1528. https://doi.org/10.1126/sciadv.adp1528

[48]: Metaculus Cup Summer 2025. Retrieved October 15, 2025, from https://www.metaculus.com/tournament/metaculus-cup-summer-2025/?page=1

[49]: Ostrovsky, N. (2025, September 18). AI Is Learning to Predict the Future—And Beating Humans at It. TIME. https://time.com/7318577/ai-model-forecasting-predict-future-metaculus/

[50]: Karger, E., Bastani, H., Yueh-Han, C., Jacobs, Z., Halawi, D., Zhang, F., & Tetlock, P. E. (2025). ForecastBench: A Dynamic Benchmark of AI Forecasting Capabilities (No. arXiv:2409.19839). arXiv. https://doi.org/10.48550/arXiv.2409.19839

[51]: Schoenegger, P., Park, P. S., Karger, E., Trott, S., & Tetlock, P. E. (2025). AI-Augmented Predictions: LLM Assistants Improve Human Forecasting Accuracy. ACM Transactions on Interactive Intelligent Systems, 15(1), 4:1–4:25. https://doi.org/10.1145/3707649

[52]: Knack, A., Balakrishnan, N., & Clancy, T. (2025). Applying AI to Strategic Warning. https://cetas.turing.ac.uk/publications/applying-ai-strategic-warning

[53]: Allen, A., Markou, S., Tebbutt, W., Requeima, J., Bruinsma, W. P., Andersson, T. R., Herzog, M., Lane, N. D., Chantry, M., Hosking, J. S., & Turner, R. E. (2025). End-to-end data-driven weather prediction. Nature, 641(8065), 1172–1179. https://doi.org/10.1038/s41586-025-08897-0

[54]: Petropoulos, F., Kourentzes, N., Nikolopoulos, K., & Siemsen, E. (2018). Judgmental selection of forecasting models. Journal of Operations Management, 60, 34–46. https://doi.org/10.1016/j.jom.2018.05.005

[55]: Benjamin et al., Hybrid forecasting of geopolitical events

[56]: Those four variables were regime type (with five levels from full autocracy to full democracy), infant mortality, armed conflict in 4 or more neighboring states, and state-led discrimination.

[57]: Goldstone, J. A., Bates, R. H., Epstein, D. L., Gurr, T. R., Lustik, M. B., Marshall, M. G., Ulfelder, J., & Woodward, M. (2010). A Global Model for Forecasting Political Instability. American Journal of Political Science, 54(1), 190–208.

[58]: Bowlsby, D., Chenoweth, E., Hendrix, C., & Moyer, J. D. (2020). The Future is a Moving Target: Predicting Political Instability. British Journal of Political Science, 50(4), 1405–1417. https://doi.org/10.1017/S0007123418000443

[59]: O’Brien, S. P. (2010). Crisis Early Warning and Decision Support: Contemporary Approaches and Thoughts on Future Research. International Studies Review, 12(1), 87–104. https://doi.org/10.1111/j.1468-2486.2009.00914.x

[60]: The Violence & Impacts Early-Warning System. (n.d.). VIEWS. Retrieved October 11, 2025, from https://viewsforecasting.org. The grid squares are based on longitude and latitude. They are 0.5 x 0.5 decimal degrees, about 55 x 55 km at the equator.

[61]: Vesco, P., Hegre, H., Colaresi, M., Jansen, R. B., Lo, A., Reisch, G., & Weidmann, N. B. (2022). United they stand: Findings from an escalation prediction competition. International Interactions, 48(4), 860–896. https://doi.org/10.1080/03050629.2022.2029856

[62]: The Violence & Impacts Early-Warning System. (n.d.). VIEWS. Interactive data visualisation tool. Retrieved November 19, 2025, from https://data.viewsforecasting.org/.

[63]: Early Warning Project. Retrieved October 11, 2025, from https://earlywarningproject.ushmm.org

[64]: Early Warning Project. Statistical Model. Retrieved August 17, 2024, from https://earlywarningproject.ushmm.org/methodology-statistical-model

[65]: Early Warning Project. (2024). Countries at Risk for Intrastate Mass Killing 2024–25: Statistical Risk Assessment Results. https://earlywarningproject.ushmm.org/storage/resources/3186/Early%20Warning%20Project%20Statistical%20Risk%20Assessment%202024-25.pdf

[66]: Early Warning Project. (2024). Summary Handout | Countries at Risk for Intrastate Mass Killing 2024–25: Early Warning Project Statistical Risk Assessment Results. https://earlywarningproject.ushmm.org/reports/summary-handout-countries-at-risk-for-intrastate-mass-killing-2024-25-early-warning-project-statistical-risk-assessment-results

[67]: Fildes, R., Goodwin, P., Lawrence, M., & Nikolopoulos, K. (2009). Effective forecasting and judgmental adjustments: An empirical evaluation and strategies for improvement in supply-chain planning. International Journal of Forecasting, 25(1), 3–23.

[68]: del Pino, J. S. (2024). Why foresight? European Public Mosaic (EPuM). Open Journal on Public Service, p. 27. Others give foresight a broader definition. For example, “The term ‘Foresight’ is often used to refer to the application of specific tools or methods for conducting Futures work.” Government Office for Science. (2021). A brief guide to futures thinking and foresight.

[69]: Government of Canada. (2024). Module 1: Introduction to Foresight. https://horizons.service.canada.ca/en/our-work/learning-materials/foresight-training-manual-module-1-introduction-to-foresight/index.shtml

[70]: Gardner, A. L., Bontoux, L., & Barela, E. (2024). Applying evaluation thinking and practice to foresight evaluation. Association of Professional Futurists.

[71]: Office of Management and Budget. (2024). Circular No. A-11: Preparation, Submission, and Execution of the Budget. https://www.whitehouse.gov/wp-content/uploads/2018/06/a11.pdf

[72]: del Pino, J. S. (2024). Why foresight? European Public Mosaic (EPuM). Open Journal on Public Service, p. 27.

[73]: Rohrbeck, R., Battistella, C., & Huizingh, E. (2015). Corporate foresight: An emerging field with a rich tradition. Technological Forecasting and Social Change, 101, 1–9. https://doi.org/10.1016/j.techfore.2015.11.002; Rohrbeck, R., Kum, M. E., Jissink, T., & Gordon, A. V. (2018). How leading firms build a superior position in markets of the future: Corporate Foresight Benchmarking Report. Strategic Foresight Research Network. https://pure.au.dk/ws/files/131917199/Corporate_Foresight_Benchmarking_Report_2018_electronic_version.pdf

[74]: FEMA. (2024). Strategic Foresight 2050 Final Report. https://www.fema.gov/sites/default/files/documents/fema_strategic-foresight-2050-final-report.pdf, p. 5; Government Office for Science, A brief guide to futures thinking and foresight.

[75]: Davenport, A. C., Ziegler, M. D., Resetar, S. A., Savitz, S., Anania, K., Bauman, M., & McDonald, K. (2022). USCG Project Evergreen V: Compilation of Activities and Summary of Results. https://www.rand.org/pubs/research_reports/RRA872-2.html

[76]: Schwarz, J. O. (2023). Strategic foresight: An introductory guide to practice. Routledge; Government of Canada, Module 1

[77]: FEMA, Strategic Foresight 2050 Final Report.

[78]: National Intelligence Council. (2021). Global Trends 2040: A More Contested World. https://www.dni.gov/files/images/globalTrends/GT2040/GlobalTrends_2040_for_web1.pdf

[79]: Government of Canada, Module 1; Government Office for Science, A brief guide to futures thinking and foresight; FEMA, Strategic Foresight 2050 Final Report.

[80]: Government Office for Science. (2024). The Futures Toolkit. https://assets.publishing.service.gov.uk/media/66c4493f057d859c0e8fa778/futures-toolkit-edition-2.pdf

[81]: Government Office for Science, A brief guide to futures thinking and foresight.

[82]: Government Office for Science, The Futures Toolkit

[83]: Government of Canada, Module 1

[84]: Government Office for Science, The Futures Toolkit

[85]: Cuhls, K. E. (2020). Horizon Scanning in Foresight – Why Horizon Scanning is only a part of the game. Futures & Foresight Science, 2(1), e23. https://doi.org/10.1002/ffo2.23; Hines, P., Yu, L. H., Guy, R. H., Brand, A., & Papaluca-Amati, M. (2019). Scanning the horizon: A systematic literature review of methodologies. BMJ Open, 9(5), e026764. https://doi.org/10.1136/bmjopen-2018-026764

[86]: Boden, J. M., Cagnin, C., Carabias, B. V., Haegeman, K., & Könnölä, T.-T. T. (2011). Facing the Future: Time for the EU to meet Global Challenges. https://doi.org/10.2791/4223; Könnölä, T., Salo, A., Cagnin, C., Carabias, V., & Vilkkumaa, E. (2012). Facing the future: Scanning, synthesizing and sense-making in horizon scanning. Science and Public Policy, 39(2), 222–231. https://doi.org/10.1093/scipol/scs021

[87]: FEMA, Strategic Foresight 2050 Final Report; Government of Canada. (2024). Module 6: Scenarios and Results. https://horizons.service.canada.ca/en/our-work/learning-materials/foresight-training-manual-module-6-scenarios-and-results/index.shtml; Government Office for Science, A brief guide to futures thinking and foresight; Schwarz, Strategic foresight

[88]: Barma, N. H., Durbin, B., Lorber, E., & Whitlark, R. E. (2016). “Imagine a World in Which”: Using Scenarios in Political Science. International Studies Perspectives, 17(2), 117–135. https://doi.org/10.1093/isp/ekv005

[89]: Government of Canada, Module 6; Government Office for Science, The Futures Toolkit

[90]: FEMA, Strategic Foresight 2050 Final Report; Government Office for Science, The Futures Toolkit

[91]: FEMA, Strategic Foresight 2050 Final Report

[92]: FEMA, Strategic Foresight 2050 Final Report

[93]: Schwarz, Strategic foresight

[94]: Government Office for Science, A brief guide to futures thinking and foresight.

[95]: Lempert, R. J., Popper, S. W., Groves, D. G., Kalra, N., Fischbach, J. R., Bankes, S. C., Bryant, B. P., Collins, M. T., Keller, K., Hackbarth, A., Dixon, L., LaTourrette, T., Reville, R. T., Hall, J. W., Mijere, C., & McInerney, D. J. (2013). Making Good Decisions Without Predictions: Robust Decision Making for Planning Under Deep Uncertainty. https://www.rand.org/pubs/research_briefs/RB9701.html

[96]: Lempert, R. J. (2019). Robust Decision Making (RDM). In V. A. W. J. Marchau, W. E. Walker, P. J. T. M. Bloemen, & S. W. Popper (Eds.), Decision Making under Deep Uncertainty: From Theory to Practice (pp. 23–51). Springer International Publishing. https://doi.org/10.1007/978-3-030-05252-2_2

[97]: Lempert, Robust Decision Making

[98]: SEC. (2010). 1161 Final. Commission Staff Working Document A Rationale for Action, Accompanying the Europe 2020 Flagship Initiative Innovation Union COM(2010) (pp. 9 and 89). http://ec.europa.eu/research/innovation-union/pdf/rationale_en.pdf, as cited in Könnölä et al., Facing the future

[99]: Gardner et al., Applying evaluation thinking and practice to foresight evaluation

[100]: Fergnani, A., & Chermack, T. J. (2021). The resistance to scientific theory in futures and foresight, and what to do about it. Futures & Foresight Science, 3(3–4), e61. https://doi.org/10.1002/ffo2.61

[101]: Rohrbeck, R., & Kum, M. E. (2018). Corporate foresight and its impact on firm performance: A longitudinal analysis. Technological Forecasting and Social Change, 129, 105–116. https://doi.org/10.1016/j.techfore.2017.12.013

[102]: Rohrbeck, R., & Kum, M. E. (2018). Corporate foresight and its impact on firm performance: A longitudinal analysis. Technological Forecasting and Social Change, 129, 105–116. https://doi.org/10.1016/j.techfore.2017.12.013

[103]: Rohrbeck, R., & Schwarz, J. O. (2013). The value contribution of strategic foresight: Insights from an empirical study of large European companies. Technological Forecasting and Social Change, 80(8), 1593–1606. https://doi.org/10.1016/j.techfore.2013.01.004. Percentages show the share of respondents who answered “yes” rather than “partly”, “no”, or “don’t know”. Top performers are the 12 firms in the sample with the highest growth rate over the last three years; low performers are the 12 with the lowest growth rate.

[104]: Burt, G., & Nair, A. K. (2020). Rigidities of imagination in scenario planning: Strategic foresight through ‘Unlearning.’ Technological Forecasting and Social Change, 153, 119927.

[105]: Schwarz, J. O., Rohrbeck, R., & Wach, B. (2020). Corporate foresight as a microfoundation of dynamic capabilities. Futures & Foresight Science, 2(2), e28. https://doi.org/10.1002/ffo2.28

[106]: Greenblott, J. M., O’Farrell, T., Olson, R., & Burchard, B. (2019). Strategic Foresight in the Federal Government: A Survey of Methods, Resources, and Institutional Arrangements. World Futures Review, 11(3), 245–266. https://doi.org/10.1177/1946756718814908; Scoblic, J. P. (2021). Strategic Foresight in U.S. Agencies. New America. http://newamerica.org/future-security/reports/strategic-foresight-in-us-agencies/

[107]: Foreign Affairs Manual § 090 Vol. 2. (2024). https://fam.state.gov/FAM/02FAM/02FAM0090.html

[108]: After Action Review on Afghanistan. (2022). U.S. Department of State. https://www.state.gov/wp-content/uploads/2023/06/State-AAR-AFG.pdf; Blinken, A. (2024, October 30). American Diplomacy for a New Era. https://geneva.usmission.gov/2024/10/30/american-diplomacy-for-a-new-era/

[109]: Office of Personnel Management (OPM). (2022). Developing & Applying Strategic Foresight for Better Human Capital Management. Government Printing Office. https://www.opm.gov/policy-data-oversight/human-capital-management/strategic-foresight/

[110]: Scoblic, Strategic Foresight in U.S. Agencies

[111]: Scoblic, Strategic Foresight in U.S. Agencies

[112]: Rijkens-Klomp, N., & Van Der Duin, P. (2014). Evaluating local and national public foresight studies from a user perspective. Futures, 59, 18–26. https://doi.org/10.1016/j.futures.2014.01.010

[113]: Menzies, M. B., & Middleton, L. (2019). Evaluating Health Futures in Aotearoa. World Futures Review, 11(4), 379–395. https://doi.org/10.1177/1946756719862114

[114]: Phadnis, S., Caplice, C., Sheffi, Y., & Singh, M. (2015). Effect of scenario planning on field experts’ judgment of long-range investment decisions: Effect of Scenario Planning. Strategic Management Journal, 36(9), 1401–1411. https://doi.org/10.1002/smj.2293

[115]: Totin, E., Butler, J. R., Sidibé, A., Partey, S., Thornton, P. K., & Tabo, R. (2018). Can scenario planning catalyse transformational change? Evaluating a climate change policy case study in Mali. Futures, 96, 44–56. https://doi.org/10.1016/j.futures.2017.11.005

[116]: Association of Professional Futurists. (2022). APF Foresight Evaluation Task Force Report: Building field and foresight practitioner evaluation capacity. https://www.apf.org/product-page/apf-foresight-evaluation-task-force-report

[117]: Chen, K.-H., & Hsu, L.-P. (2020). Visioning the future: Evaluating learning outcomes and impacts of futures-oriented education. Journal of Futures Studies, 24(4), 103–116. Results are based on an analysis of 20 questions divided into 5 factors. Differences for individual questions are unreported.

[118]: Mengel, T. (2019). Learning Portfolios as Means of Evaluating Futures Learning: A Case Study at Renaissance College. World Futures Review, 11(4), 360–378. https://doi.org/10.1177/1946756719851522